Alternative Computing Technologies (ACT) Lab

School of Computer Science

Georgia Institute of Technology

Bit Fusion builds upon the algorithmic insight that the bitwidth of operations in DNNs can be reduced without compromising their classification accuracy. However, the bitwidth needed to prevent loss of accuracy varies significantly across DNNs, and it may even be adjusted for each layer individually. To leverage this opportunity, our ISCA 2018 paper introduces dynamic bit-level fusion/decomposition as a new dimension in the design of DNN accelerators. Bit Fusion is one incarnation of a bit-level composable architecture: it consists of an array of bit-level processing elements that dynamically fuse to match the bitwidth of individual DNN layers. This flexibility lets the architecture minimize computation and communication at the finest possible granularity with no loss in accuracy.

Citation

If you use this work, kindly cite our paper (PDF, Bibtex) published in The 45th International Symposium on Computer Architecture (ISCA), 2018:

Sharma, Hardik, Jongse Park, Naveen Suda, Liangzhen Lai, Benson Chau, Vikas Chandra, and Hadi Esmaeilzadeh. "Bit Fusion: Bit-Level Dynamically Composable Architecture for Accelerating Deep Neural Networks", ISCA 2018.

Bit-Level Composable Architecture

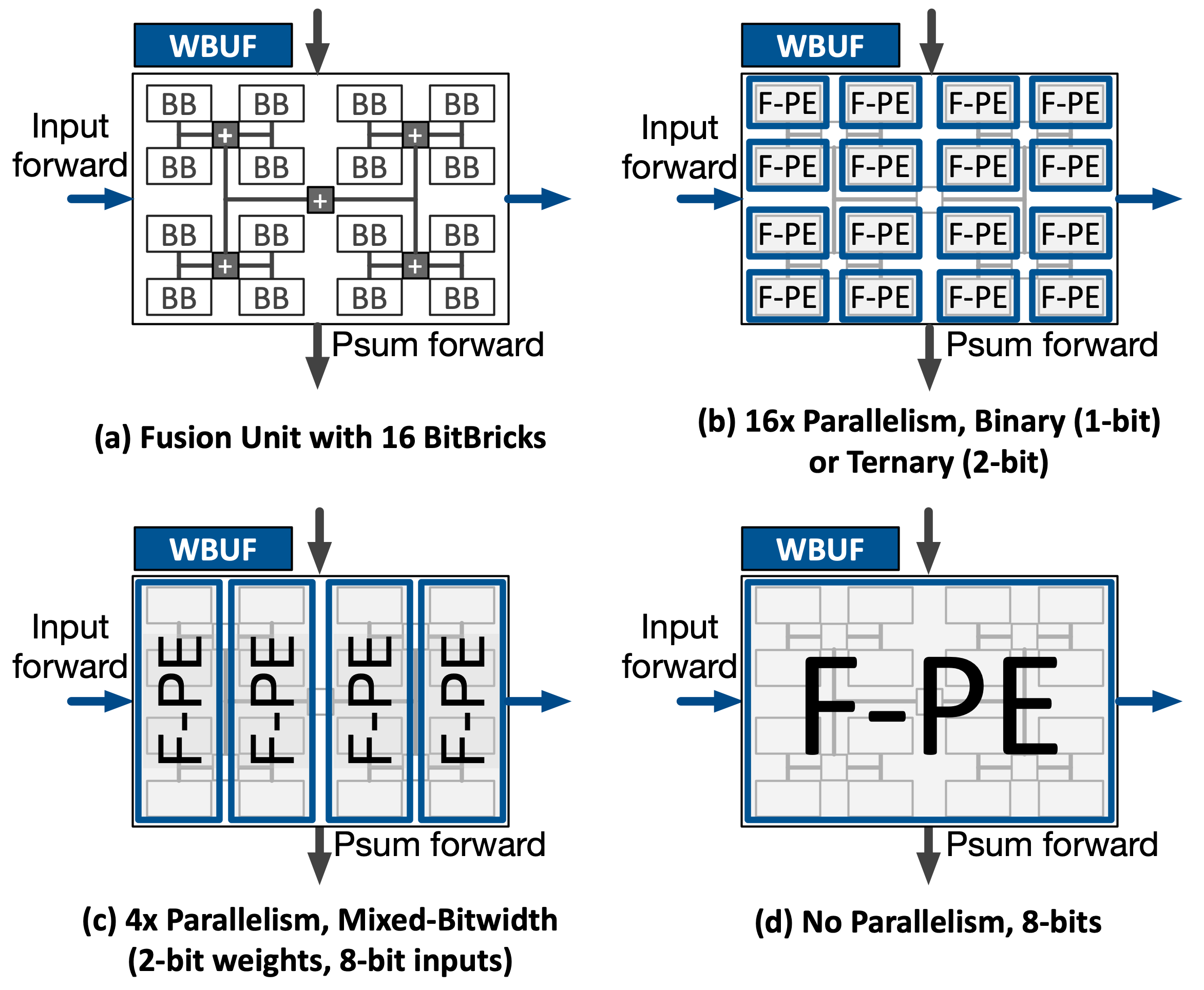

The basic building block in the Bit Fusion architecture is the BitBrick (BB), which can perform binary (0, +1) and ternary (-1, 0, +1) operations. Bit Fusion arranges the BitBricks in a 2-dimensional physical grouping, called a Fusion Unit, as shown in Figure (a). At run time, the BitBricks logically fuse together to form Fused Processing Engines (F-PEs) that match the bitwidths required by the multiply-add operations of a DNN layer. Figures (b), (c), and (d) show three different ways of logically fusing BitBricks: (b) 16 F-PEs that support binary or ternary operands; (c) four F-PEs that support mixed bitwidths (2-bit weights and 8-bit inputs); and (d) one F-PE that supports 8-bit operands.
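The fusion idea can be illustrated with a minimal Python sketch. It assumes, consistently with the configurations described in this section but not stated explicitly here, that each BitBrick multiplies one 2-bit slice of the input by one 2-bit slice of the weight, and it handles only unsigned operands. `bitbrick` and `fused_multiply` are hypothetical names, not part of the Bit Fusion code base. A wider multiply splits each operand into 2-bit slices, lets one BitBrick compute each slice-by-slice partial product, and sums the partial products after shifting each by its combined bit position.

```python
SLICE_BITS = 2                      # assumed width of each BitBrick operand
SLICE_MASK = (1 << SLICE_BITS) - 1  # 0b11


def bitbrick(x_slice: int, w_slice: int) -> int:
    """Hypothetical model of one BitBrick: an unsigned 2-bit x 2-bit multiply."""
    return x_slice * w_slice


def fused_multiply(x: int, w: int, x_bits: int, w_bits: int) -> tuple[int, int]:
    """Multiply an x_bits-wide input by a w_bits-wide weight with fused BitBricks.

    Returns (product, number of BitBricks used). Operands are unsigned here.
    """
    assert x_bits % SLICE_BITS == 0 and w_bits % SLICE_BITS == 0
    assert 0 <= x < (1 << x_bits) and 0 <= w < (1 << w_bits)
    x_slices = x_bits // SLICE_BITS
    w_slices = w_bits // SLICE_BITS
    product = 0
    for i in range(x_slices):
        xs = (x >> (i * SLICE_BITS)) & SLICE_MASK      # i-th 2-bit slice of the input
        for j in range(w_slices):
            ws = (w >> (j * SLICE_BITS)) & SLICE_MASK  # j-th 2-bit slice of the weight
            # Shift each partial product by the combined significance of its two slices.
            product += bitbrick(xs, ws) << ((i + j) * SLICE_BITS)
    return product, x_slices * w_slices


print(fused_multiply(200, 3, x_bits=8, w_bits=2))    # (600, 4): Figure (c)-style F-PE
print(fused_multiply(173, 219, x_bits=8, w_bits=8))  # (37887, 16): Figure (d)-style F-PE
```

Signed and ternary operands would need additional sign handling in each BitBrick, which this sketch deliberately omits.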

The bitwidths of operands supported by an F-PE depend on the spatial arrangement of the BitBricks fused together.

In Figure (c), each F-PE fuses four BitBricks. By varying the spatial arrangement of these four BitBricks, an F-PE can support 8-bit/2-bit, 4-bit/4-bit, or 2-bit/8-bit configurations for inputs/weights.
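One way to see how a spatial arrangement maps to operand bitwidths is to enumerate every rectangular grid of a given number of BitBricks. This Python sketch assumes that rows of the grid span 2-bit input slices and columns span 2-bit weight slices; that orientation, the 8-bit cap, and the function name `arrangements` are illustrative assumptions rather than details taken from the paper.

```python
SLICE_BITS = 2  # assumed operand width of a single BitBrick
MAX_BITS = 8    # widest operand discussed in this section


def arrangements(num_bitbricks: int) -> list[tuple[int, int]]:
    """Return (input_bits, weight_bits) for each rows x cols grid of BitBricks.

    Assumes rows span 2-bit input slices and columns span 2-bit weight slices.
    """
    configs = []
    for rows in range(num_bitbricks, 0, -1):
        if num_bitbricks % rows:
            continue  # not a valid rectangular arrangement
        cols = num_bitbricks // rows
        input_bits, weight_bits = rows * SLICE_BITS, cols * SLICE_BITS
        if input_bits <= MAX_BITS and weight_bits <= MAX_BITS:
            configs.append((input_bits, weight_bits))
    return configs


print(arrangements(4))   # [(8, 2), (4, 4), (2, 8)]
print(arrangements(16))  # [(8, 8)]
```

With four BitBricks the three grids (4x1, 2x2, 1x4) reproduce the three input/weight configurations listed above, and with 16 BitBricks the only grid within 8 bits is the 4x4 one used for 8-bit operands.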

Finally, up to 16 BitBricks can fuse together to construct a single F-PE that can operate on 8-bit operands for the multiply-add operations (Figure (d)).

BitBricks fuse together in powers of 2, so a single Fusion Unit with 16 BitBricks can offer 1, 2, 4, 8, or 16 F-PEs, depending on the operand bitwidths.
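The powers-of-2 pattern follows from simple arithmetic if, as assumed in this Python sketch, an F-PE needs one BitBrick per pair of 2-bit input and weight slices. Binary and ternary operands are treated as occupying a single 2-bit slice, represented by the value 2 below; the constants and function names are hypothetical.

```python
FUSION_UNIT_BITBRICKS = 16   # BitBricks in one Fusion Unit, per the text
SUPPORTED_BITS = (2, 4, 8)   # operand widths considered in this sketch


def bitbricks_per_fpe(input_bits: int, weight_bits: int) -> int:
    """Assumes one BitBrick per (2-bit input slice, 2-bit weight slice) pair."""
    return (input_bits // 2) * (weight_bits // 2)


def fpes_per_fusion_unit(input_bits: int, weight_bits: int) -> int:
    """Number of F-PEs one Fusion Unit can form for the given operand widths."""
    return FUSION_UNIT_BITBRICKS // bitbricks_per_fpe(input_bits, weight_bits)


for ib in SUPPORTED_BITS:
    for wb in SUPPORTED_BITS:
        print(f"{ib}-bit inputs / {wb}-bit weights: {fpes_per_fusion_unit(ib, wb)} F-PEs")

counts = sorted({fpes_per_fusion_unit(i, w) for i in SUPPORTED_BITS for w in SUPPORTED_BITS})
print(counts)  # [1, 2, 4, 8, 16]
```

If each F-PE completed one multiply-add per cycle (an assumption made only for this illustration), these counts would also give the multiply-adds per cycle of one Fusion Unit, which is the parallelism that bit-level composability exposes.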

Dynamic composability of the Fusion Units at the bit level enables the architecture to expose the maximum possible parallelism at the finest granularity that matches the bitwidth of the DNN operands.

Bit Fusion Instruction Set

Bit Fusion uses a block-structured Instruction Set Architecture (ISA), wherein computations in the DNN are expressed as blocks of instructions with varying bitwidth requirements.
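The actual instruction encoding is defined in the ISA specification; the following is only a hypothetical Python model of the block-structured idea, not the real ISA. `InstructionBlock`, `fusion_schedule`, the placeholder instruction strings, and the per-layer bitwidths are all invented for illustration. Each block carries a single input/weight bitwidth setting shared by its instructions, which is one way per-block bitwidths could determine how BitBricks are fused while that block executes.

```python
from dataclasses import dataclass, field

FUSION_UNIT_BITBRICKS = 16  # BitBricks in one Fusion Unit, per the text


def slices(bits: int) -> int:
    """Number of 2-bit slices needed for an operand (binary/ternary -> 1)."""
    return -(-bits // 2)  # ceiling division


@dataclass
class InstructionBlock:
    """Hypothetical block: every instruction in it shares one bitwidth setting."""
    name: str
    input_bits: int
    weight_bits: int
    instructions: list[str] = field(default_factory=list)


def fusion_schedule(blocks: list[InstructionBlock]) -> list[tuple[str, int]]:
    """Return (block name, F-PEs per Fusion Unit) for each block, in program order."""
    schedule = []
    for block in blocks:
        bricks = slices(block.input_bits) * slices(block.weight_bits)
        schedule.append((block.name, FUSION_UNIT_BITBRICKS // bricks))
    return schedule


# Illustrative layer bitwidths only; not results from the paper.
program = [
    InstructionBlock("conv1", input_bits=8, weight_bits=8, instructions=["load", "compute", "store"]),
    InstructionBlock("conv2", input_bits=4, weight_bits=4, instructions=["load", "compute", "store"]),
    InstructionBlock("fc", input_bits=8, weight_bits=2, instructions=["load", "compute", "store"]),
]
print(fusion_schedule(program))  # [('conv1', 1), ('conv2', 4), ('fc', 4)]
```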

For more details, please refer to our paper (PDF, Bibtex) or visit our GitHub repository for a detailed ISA specification.